ComMU: Dataset for Combinatorial Music Generation

NeurIPS 2022

We have made an exciting breakthrough in AI music generation, including a refined dataset and a novel methodology. Rich metadata corresponding to each MIDI sample (note sequence) lets the AI model learn diverse representations and generate controllable, high-quality music. Combining the generated samples yields a complete song, which is why we call it Combinatorial Music Generation!

The research was accepted at the top-tier AI conference NeurIPS 2022. Key materials, including the code, paper, and demo page, are listed below. On the demo page in particular, you can listen directly to the AI-generated samples and watch the supplementary video.

Introduction

This research proposes a process for combinatorial music generation that mimics the human convention for composing homophonic music. It presents ComMU, a symbolic music dataset containing 11,144 MIDI samples composed by professional composers, each with 12 corresponding metadata fields, to enable sophisticated control over the generation process. By leveraging the many possible combinations of metadata and note sequences, the dataset allows for the creation of diverse music that comes close to human creativity.

Related Work

Combinatorial music generation extends conditional music generation by generating a track from a given set of metadata. The paper compares this setting with existing works such as MuseNet and FIGARO, and discusses how their approaches differ from the MMM model, which takes instruments, BPM, and the number of bars as conditions.

In the symbolic domain of music generation, ComMU is the first dataset to separate the instrument from the track-role, and it is the only dataset to provide track-role information. It also has rich metadata such as genre, instrument, key, and time signature, as well as chord information for the harmony of track-level composition. This makes it distinct from other MIDI-based datasets such as the Lakh MIDI Dataset, MAESTRO, ADL Piano MIDI, MSMD, GiantMIDI-Piano, and EMOPIA.

Dataset Collection

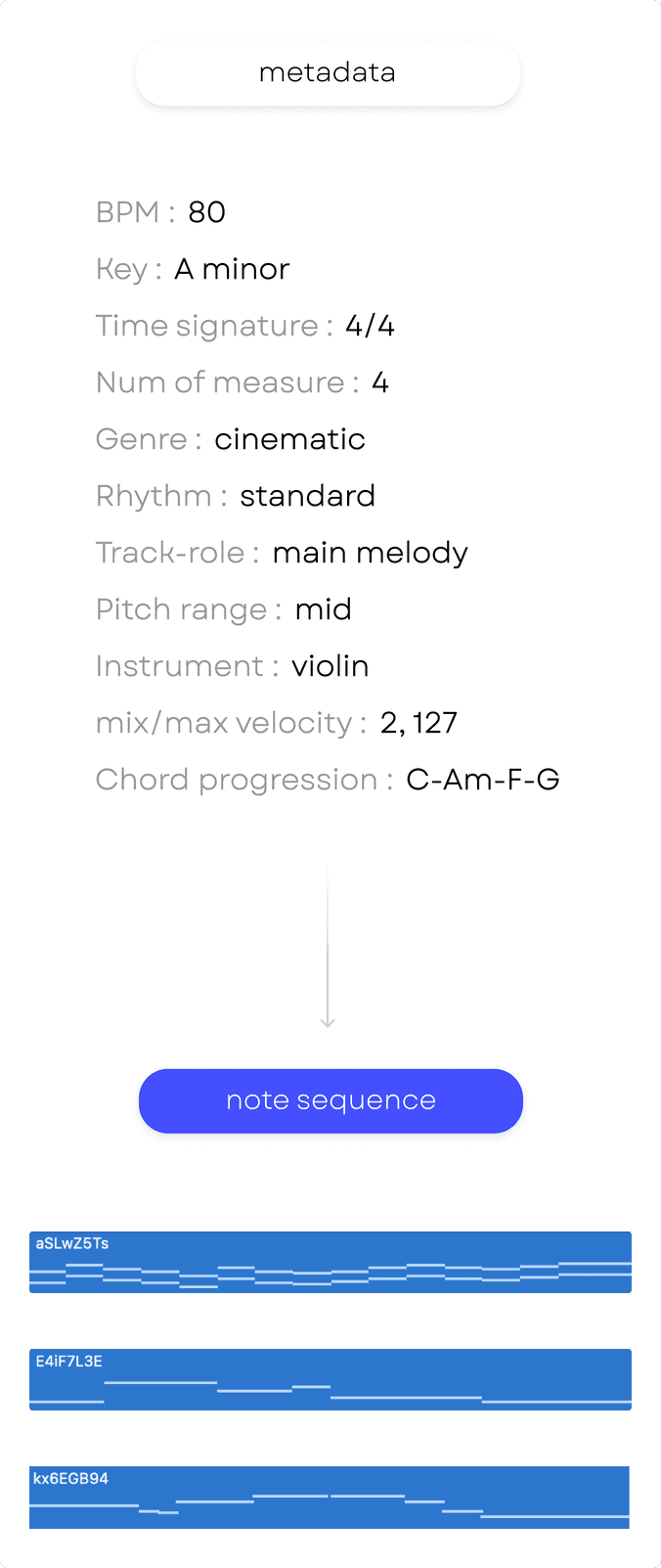

Fourteen professional composers were split into two teams for a 6-month project to create 11,144 MIDI samples with accompanying metadata, with one team creating composition guidelines and the other team composing the samples according to those guidelines.

Metadata

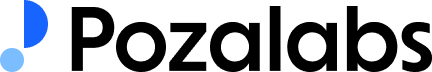

In addition to metadata defined in previous works (e.g., BPM, key, instrument, and time signature), this paper defines three new metadata fields, including two unique ones (track-role and chord progression).

We chose the new age and cinematic genres for our dataset. New age is a melodious genre built around keyboards and small instruments. Cinematic is a large-scale genre in which an orchestra and classical instruments such as strings carry the melody and accompaniment.

We classified multi-track music into six track-roles: main melody, sub melody, accompaniment, bass, pad, and riff. This multi-track configuration mirrors the real composing process. It can improve the capacity and flexibility of automatic composition and provides more detailed conditions for music generation.

Chord progression is the set of chords used in a sample. Richer chord qualities lead to better harmony and diversity by teaching the model many possible melodies associated with the same chord progression. The chord qualities in ComMU include not only major (maj), minor (min), diminished, augmented, and dominant, but also sus4, maj7, half-diminished, and min7. These extended chord qualities make it easier to capture the precise intention of a composition and improve harmonic performance.
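To make the metadata description above more concrete, here is a minimal Python sketch of how one sample's metadata might be represented. It is not the dataset's actual schema: the class name `SampleMetadata`, the field names, the string spellings of the chord qualities, and the `root:quality` chord notation are all assumptions made for illustration, and only the fields explicitly named in the text are included rather than all 12.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class TrackRole(Enum):
    # The six track-roles named in the text; the string values are assumed.
    MAIN_MELODY = "main_melody"
    SUB_MELODY = "sub_melody"
    ACCOMPANIMENT = "accompaniment"
    BASS = "bass"
    PAD = "pad"
    RIFF = "riff"


# Chord qualities listed in the text; these spellings are hypothetical.
CHORD_QUALITIES = {
    "maj", "min", "dim", "aug", "dom",
    "sus4", "maj7", "half-dim", "min7",
}


def chord_quality(chord: str) -> str:
    """Return the quality part of a 'root:quality' chord label (assumed format)."""
    _root, _sep, quality = chord.partition(":")
    return quality


@dataclass
class SampleMetadata:
    """Illustrative subset of the metadata attached to one MIDI sample."""
    genre: str                    # e.g. "new_age" or "cinematic"
    bpm: int
    key: str
    instrument: str
    time_signature: str
    track_role: TrackRole
    chord_progression: List[str]  # e.g. ["C:maj", "A:min7"]

    def has_valid_chords(self) -> bool:
        """Check that every chord uses one of the listed qualities."""
        return all(chord_quality(c) in CHORD_QUALITIES
                   for c in self.chord_progression)
```

Keeping the track-role as a separate field from the instrument reflects the separation the dataset emphasizes: the same instrument can play different roles in different samples.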

Generation Process

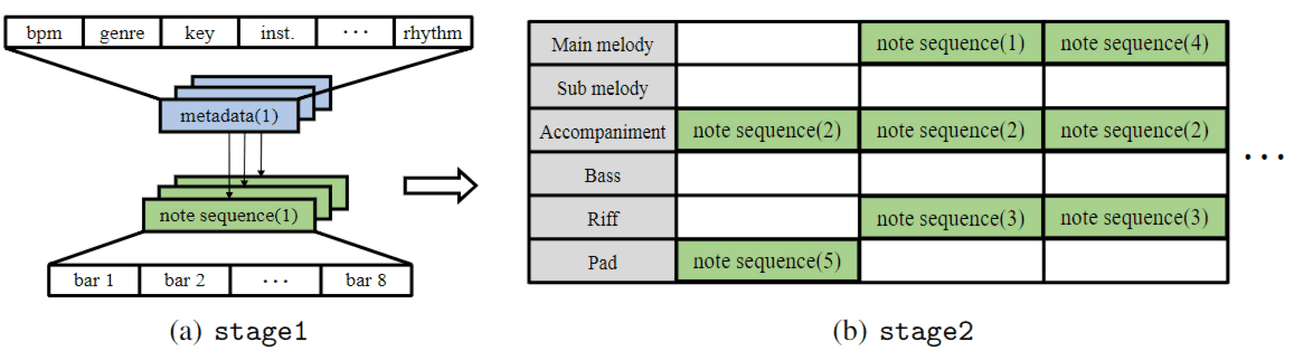

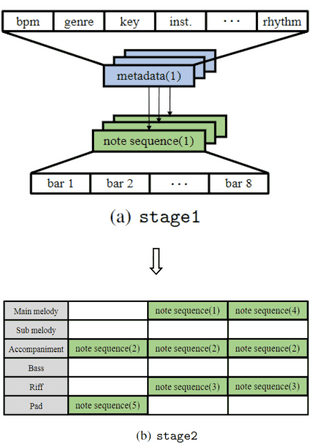

The whole generation process is divided into two stages.

- A note sequence is generated from a set of metadata.

- The note sequences are combined to create a complete piece of music.

The combination is carried out both horizontally and vertically, placing each sequence in the appropriate track and at the appropriate time.
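The combination stage described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation: it reads "horizontal" as joining sequences in time within one track and "vertical" as layering tracks with different track-roles. The `Segment` type, the note tuple layout, and both function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical note representation: (start_beat, duration_beats, midi_pitch).
Note = Tuple[float, float, int]


@dataclass
class Segment:
    track_role: str
    length_beats: float
    notes: List[Note]


def concat_horizontal(segments: List[Segment]) -> Segment:
    """Join segments of the same track-role end to end in time."""
    if not segments:
        raise ValueError("need at least one segment")
    role = segments[0].track_role
    offset = 0.0
    notes: List[Note] = []
    for seg in segments:
        if seg.track_role != role:
            raise ValueError("horizontal combination expects a single track-role")
        # Shift each note by the total length of the segments before it.
        notes.extend((start + offset, dur, pitch) for start, dur, pitch in seg.notes)
        offset += seg.length_beats
    return Segment(role, offset, notes)


def stack_vertical(tracks: List[Segment]) -> Dict[str, List[Note]]:
    """Layer tracks with different track-roles so they sound together."""
    song: Dict[str, List[Note]] = {}
    for track in tracks:
        if track.track_role in song:
            raise ValueError(f"duplicate track-role: {track.track_role}")
        song[track.track_role] = sorted(track.notes)
    return song
```

For example, two four-beat main-melody segments can be concatenated into one eight-beat melody and then stacked with an eight-beat bass track to form a two-track piece.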

Evaluation

The experimental results regarding the generated music sequence were analyzed in three parts.

Objective metrics include pitch control, velocity control, harmony control, and diversity. Controllability of pitch, velocity, and harmony is measured as the ratio of notes that fall within the specified range or belong to the corresponding key. Diversity is measured as the average pairwise distance between samples generated from the same metadata.
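As a rough illustration of how such metrics could be computed, the sketch below implements a range ratio, an in-key ratio, and an average pairwise distance. The function names are hypothetical, and the Jaccard distance over pitch sets is only a stand-in: the text does not specify which distance the paper uses.

```python
from itertools import combinations
from typing import Callable, Sequence, Set


def in_range_ratio(values: Sequence[int], low: int, high: int) -> float:
    """Fraction of values (pitches or velocities) inside [low, high]."""
    if not values:
        return 0.0
    return sum(low <= v <= high for v in values) / len(values)


def in_key_ratio(pitches: Sequence[int], scale_pitch_classes: Set[int]) -> float:
    """Fraction of MIDI pitches whose pitch class belongs to the key's scale."""
    if not pitches:
        return 0.0
    return sum(p % 12 in scale_pitch_classes for p in pitches) / len(pitches)


def jaccard_distance(a: Sequence[int], b: Sequence[int]) -> float:
    """Assumed stand-in distance: 1 - |A ∩ B| / |A ∪ B| over pitch sets."""
    set_a, set_b = set(a), set(b)
    union = set_a | set_b
    if not union:
        return 0.0
    return 1.0 - len(set_a & set_b) / len(union)


def average_pairwise_distance(
    samples: Sequence[Sequence[int]],
    distance: Callable[[Sequence[int], Sequence[int]], float] = jaccard_distance,
) -> float:
    """Mean distance over all unordered pairs of samples from the same metadata."""
    pairs = list(combinations(samples, 2))
    if not pairs:
        return 0.0
    return sum(distance(a, b) for a, b in pairs) / len(pairs)
```

For instance, if two of four generated pitches lie inside the requested pitch range, `in_range_ratio` gives 0.5 for that sample. Any other sequence distance can be passed through the `distance` parameter.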

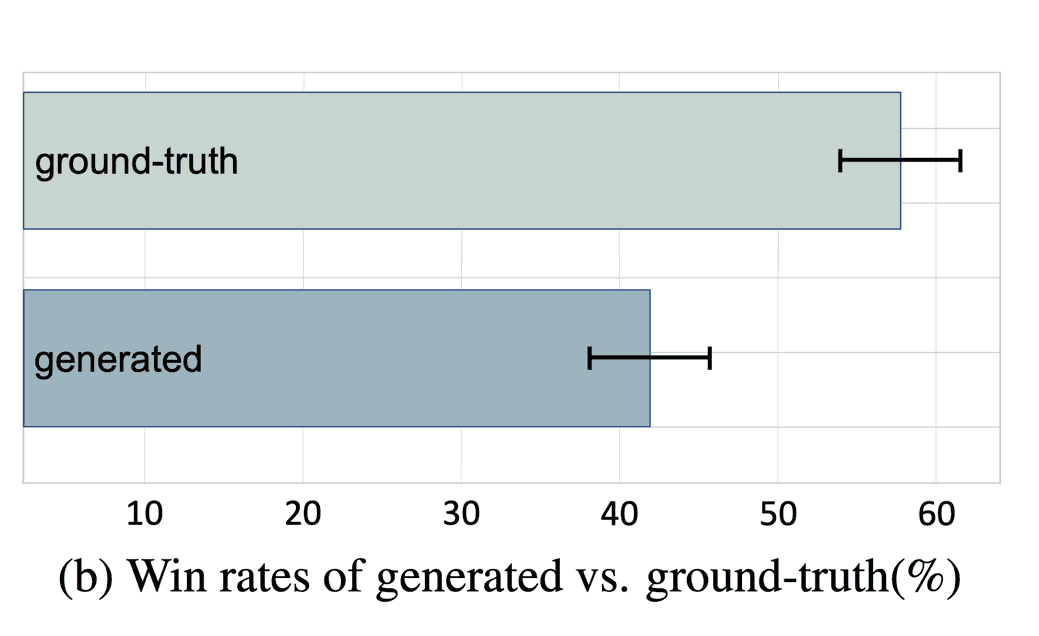

Forty anonymous composers compared generated samples with ground-truth samples that share the same metadata. The win rate of the generated samples is over 40%, which indicates that they are close to human level.

Conclusion

We presented ComMU, a dataset for stage 1 of combinatorial music generation, consisting of 12 metadata fields matched with note sequences manually created by composers. Through quantitative and qualitative experiments, we demonstrated high-fidelity, controllable, and diverse music samples, as well as the effectiveness of the unique metadata. Based on these results, we expect ComMU to open up a wide range of future research in automatic composition that moves closer to human level.